- #CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY HOW TO#

- #CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY SOFTWARE#

- #CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY WINDOWS#

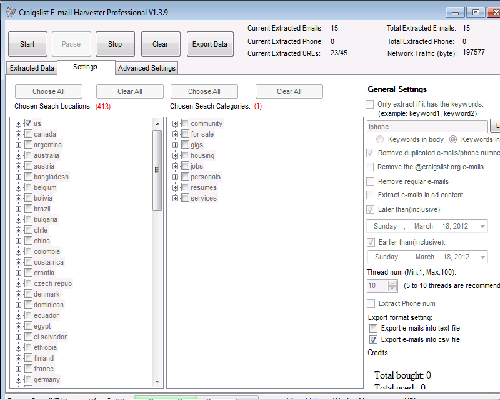

I will get every piece of public information out of a list of Craigslist ads, from all the written data to potential email addresses and phone numbers. The tool is a general-purpose utility written in Python (v3.0+) for crawling websites to extract email addresses. The results can be exported to txt and csv formats.

The crawling rules use the following parameters:

- `restrict_xpaths`: restricts link extraction to a certain XPath.
- `callback`: names the parsing function that is called after each page is scraped.
- `follow`: specifies whether to continue following links as long as they exist.

Please note: make sure you rename the parsing function to something besides `parse`, as the CrawlSpider uses the `parse` method to implement its logic.

#CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY SOFTWARE#

You can extract email addresses using targeted keywords: the software finds the leads whose ads match those keywords.
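The software's internals are not described here, so the following is only a sketch of the general idea: keep the ads that mention at least one keyword, then pull email addresses out of them with a regular expression. The function name `extract_leads`, the sample ads and the deliberately simple email pattern are assumptions for illustration.

```python
import re

# A simple pattern that covers common addresses; it is not a full RFC 5322 parser.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_leads(ads, keywords):
    """Return sorted, unique emails from ads whose text mentions any keyword."""
    wanted = [keyword.lower() for keyword in keywords]
    found = set()
    for text in ads:
        lowered = text.lower()
        if any(keyword in lowered for keyword in wanted):
            # Normalise case so the same address is not counted twice.
            found.update(match.lower() for match in EMAIL_RE.findall(text))
    return sorted(found)


# Made-up sample ads.
ads = [
    "Volunteer coordinator wanted. Contact jobs@example.org for details.",
    "Selling a used bike, email seller@example.com.",
    "Nonprofit grant writer needed: write to Hiring@Example.org",
]

leads = extract_leads(ads, ["volunteer", "nonprofit"])
print(leads)  # ['hiring@example.org', 'jobs@example.org']
```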

#CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY HOW TO#

In the first tutorial, I showed you how to write a crawler with Scrapy to scrape Craigslist nonprofit jobs in San Francisco and store the data in a CSV file. Let's check the parts of the main class of the file automatically generated for our jobs Scrapy spider:

1. `name`: the name of the spider.
2. `allowed_domains`: the list of domains that the spider is allowed to scrape.
3. `start_urls`: the list of one or more URLs with which the spider starts crawling.

This program can extract emails from pdf, json and txt files and, where applicable, from some websites. It then shows you all the domains it found and asks which one you want to export. At the end you have the option to export the results as pdf, xlsm, docx, txt, json or csv.

With MechanicalSoup, you create a browser session and, once the page is downloaded, use Beautiful Soup's methods like find() and find_all() to extract data from the HTML document. Another impressive feature of MechanicalSoup is that it lets you fill out forms using a script. This is especially helpful when you need to enter something in a field (a search bar, for instance) to get to the page you want to scrape. MechanicalSoup's request handling is a strong point: it can automatically handle redirects and follow links on a page, saving you the effort of coding that manually.

Since it's based on Beautiful Soup, the two libraries share most of their drawbacks: for example, there is no built-in way to handle data output, proxy rotation, or JavaScript rendering. The only Beautiful Soup issue MechanicalSoup has remedied is request handling, which it solves by wrapping the Python requests library.
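As a rough illustration of the domain-selection and export step of the email extraction program described above, the sketch below groups addresses by domain and writes one group out as CSV. It is not the program's actual code: the helper names `group_by_domain` and `export_csv`, the sample addresses and the hard-coded choice of domain are assumptions, and only the CSV format is shown.

```python
import csv
import io
from collections import defaultdict


def group_by_domain(emails):
    """Map each domain to the list of addresses found for it."""
    groups = defaultdict(list)
    for email in emails:
        domain = email.rsplit("@", 1)[1].lower()
        groups[domain].append(email)
    return dict(groups)


def export_csv(emails, handle):
    """Write the addresses to an open text handle, one per row, with a header."""
    writer = csv.writer(handle)
    writer.writerow(["email"])
    for email in emails:
        writer.writerow([email])


# Made-up addresses standing in for extracted results.
emails = ["a@example.org", "b@example.com", "c@example.org"]
groups = group_by_domain(emails)
print(sorted(groups))  # the domains a user could choose from

chosen = "example.org"   # stands in for the interactive prompt
buffer = io.StringIO()   # a real run would open a file instead
export_csv(groups[chosen], buffer)
print(buffer.getvalue())
```

Writing to an in-memory buffer keeps the sketch free of side effects; swapping it for `open("emails.csv", "w", newline="")` would produce a file on disk.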

While the names are similar, MechanicalSoup's syntax and workflow are very different from Beautiful Soup's.

Scrapy sends and processes requests asynchronously, and this is what sets it apart from other web scraping tools. Apart from the basic features, you also get support for middlewares, a framework of hooks that injects additional functionality into the default Scrapy mechanism. You can't scrape JavaScript-driven websites with Scrapy out of the box, but you can use middlewares like scrapy-selenium, scrapy-splash, and scrapy-scrapingbee to add that functionality to your project. Finally, when you're done extracting the data, you can export it in various file formats: CSV, JSON, and XML, to name a few.

Unlike Beautiful Soup, the true power of Scrapy is its sophisticated mechanism. But don't let that complexity intimidate you. Scrapy is the most efficient web scraping framework on this list, in terms of both speed and features. It comes with selectors that let you select data from an HTML document using XPath or CSS expressions. An added advantage is the speed at which Scrapy sends requests and extracts the data.
